For the past few years various news media have repeatedly conveyed the belief that:

- The mathematical abilities of Albertan and Canadian students have declined significantly.

- This decline was caused by the introduction of a new curriculum.

- Evidence is provided by the results of the PISA tests.

That sentiment is what this post is about, but let me start with this question.

At a certain junior high school the averages of the math grades for the past three years were 73%, 75%, and 73%. How would you rate this school?

1. Definitely a top-notch school.

2. That’s a pretty good school.

3. That’s what an average school should be like.

4. Mediocre: room for improvement.

5. Poor: I would think about sending my child to a different school.

Myself, I would choose (2). I would expect grades of 65% to 70% to be about average, so I feel the school is a pretty good one. Not absolutely fantastic, but pretty good.

The real story:

The averages cited above are not for a particular school, but for an entire country. The figures are the average math scores for top-notch Singapore in the 2009, 2012, and 2015 rounds of the PISA tests. (If you are familiar with the PISA tests, you know that the scores are not recorded as percentages. The numbers 73%, 75%, and 73% are what you get when you convert the published PISA scores in a reasonable way.1)

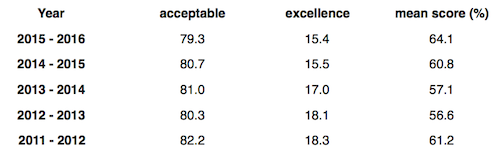

For comparison, here are the Alberta and Canadian results for the years from 2003 through 2015, again converted to percentages. (Singapore did not participate before 2009.)

The variation across the PISA rounds for all three regions seems pretty unexceptional. I would expect changes of ±3% at the very least; the year-to-year variability in my university math classes was at least as large as that.

[As an aside, I predicted in a previous post that Canada would get a grade in the range from 65% to 67% on the math part of the 2015 PISA test. I promised a Trumpian gloat if the prediction was correct, and a Clintonian sob if it was wrong. No guru here, I’m afraid — it was pure luck — but "Gloat. Gloat" anyway.]

In spite of the stable relationship between the results for Alberta and Canada, there is alarm that Alberta's performance in the 2015 round has pushed the province below the rest of Canada. That may be numerically accurate if you look at the raw scores published by PISA, but the results are so close that the difference vanishes when they are converted to percentages. More importantly, the PISA consortium itself reports that the difference is so small that it is not statistically significant, which means there is no reliable evidence of any difference whatsoever.
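To make the two points in this paragraph concrete, here is a minimal Python sketch. It converts scores with the formula from footnote 1 and rounds them to whole percentages, and it computes a rough z-statistic for the gap between two means. The scores (511 and 515) and standard errors (4.0 and 2.5) are hypothetical, not the published Alberta or Canada figures. The z-test also treats the two estimates as independent, which is only an approximation, since Alberta's students are part of Canada's sample; PISA's own significance tests are more involved.

```python
import math


def pisa_to_percent(score: float) -> float:
    """Convert a PISA math score to a percentage (formula from footnote 1)."""
    return 60 + (score - 482) * (10 / 62.3)


def gap_z(score_a: float, se_a: float, score_b: float, se_b: float) -> float:
    """Rough z-statistic for the gap between two means.

    Simplifying assumption: the two estimates are independent.
    """
    return (score_a - score_b) / math.sqrt(se_a**2 + se_b**2)


# Hypothetical scores and standard errors, for illustration only.
province, country = 511, 515
se_province, se_country = 4.0, 2.5

# Rounded to whole percentages, the 4-point gap disappears.
print(round(pisa_to_percent(province)), round(pisa_to_percent(country)))  # 65 65

# |z| must exceed about 1.96 to be significant at the 5% level.
z = gap_z(country, se_country, province, se_province)
print(f"z = {z:.2f}, significant at 5%: {abs(z) > 1.96}")  # z = 0.85, significant at 5%: False
```

With these made-up numbers, both scores come out at 65%, and z is well under 1.96, so the gap would not count as statistically significant at the usual 5% level.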

I don’t think that our ranking among the PISA participants indicates that our math curriculum is either superb or deficient. But using the PISA rankings to critique the curriculum is what people do. To confirm this, all you have to do is read the opening foray in the petition that stoked the current math wars in Alberta. Here is what it says:

"The Organization for Economic Co-operation and Development (OECD) recently released a report confirming that math scores, measured in 15yr olds, have declined significantly in the ten years since the new math curriculum was introduced across Canada."

Additionally, there is this report in the Globe and Mail in January 2014:

Robert Craigen, a University of Manitoba mathematics professor who advocates drilling basic math skills and algorithms, said Canada’s downward progression in the international rankings – slipping from sixth to 13th among participating countries since 2000 – coincides with the adoption of discovery learning.

So, what about that new curriculum?

The petition was presented to the Alberta legislature on Tuesday, Jan 28, 2014. (A later article gives a date of March 2014.)

So apparently the new curriculum was introduced a decade earlier, in 2004. However, that's not what happened: no students were taught the new curriculum before the 2008-2009 school year. To see just how far off the 2004 date is, let’s look at how the curriculum was actually phased in. Here is the schedule for the years that each grade was first exposed to the curriculum. (I thrashed around a long time trying to find this. Thanks to David Martin and John Scammell for providing me with the information. Since Alberta Ed does not have this information on its website, I cannot be sure that Alberta actually followed the implementation schedule, but I'm assuming that was the case.)

The PISA exams take place every three years, and Canadian students take the tests in Grade 10. Just like David and John did in their 2014 posts2, I’ll save you some time and do the math so you can see when the students were first exposed to the new curriculum.

The curriculum changes could not be reflected in any way by the PISA results prior to 2012. And those who wrote the PISA test in 2012 were not exposed to the new curriculum in the elementary grades (which is where those who blame the new curriculum for our decline are pointing their fingers).

- Those who took the 2003 test - never exposed

- Those who took the 2006 test - never exposed

- Those who took the 2009 test - never exposed

- Those who took the 2012 test - first exposed in grade 7

- Those who took the 2015 test - first exposed in grade 4
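The arithmetic behind the list above can be sketched in a few lines of Python. The phase-in table is an assumption: Kindergarten and grades 1, 4, and 7 switching in 2008-2009, grades 2, 5, and 8 in 2009-2010, and grades 3, 6, 9, and 10 in 2010-2011. It was chosen to be consistent with the list, not copied from Alberta Education's records. The sketch also assumes students write PISA in Grade 10, late in the school year that ends in the test year.

```python
from typing import Optional

# ASSUMED phase-in (hypothetical): fall start of the first school year in which
# each grade used the new curriculum. Grade 0 stands for Kindergarten.
ASSUMED_ROLLOUT = {
    0: 2008, 1: 2008, 4: 2008, 7: 2008,
    2: 2009, 5: 2009, 8: 2009,
    3: 2010, 6: 2010, 9: 2010, 10: 2010,
}


def first_exposure_grade(pisa_year: int, test_grade: int = 10) -> Optional[int]:
    """Earliest grade at which a PISA cohort met the new curriculum, or None.

    Assumes the cohort is in `test_grade` during the school year that starts
    in the fall of pisa_year - 1 and writes PISA late in that school year.
    """
    for grade in range(0, test_grade + 1):
        # Fall of the school year in which this cohort was in `grade`.
        fall = pisa_year - 1 - (test_grade - grade)
        if fall >= ASSUMED_ROLLOUT[grade]:
            return grade
    return None


for year in (2003, 2006, 2009, 2012, 2015):
    grade = first_exposure_grade(year)
    print(year, "never exposed" if grade is None else f"first exposed in grade {grade}")
```

Under these assumptions the output reproduces the list: the 2003, 2006, and 2009 cohorts are never exposed, the 2012 cohort first meets the new curriculum in grade 7, and the 2015 cohort in grade 4. Editing `ASSUMED_ROLLOUT` is an easy way to test other schedules.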

The biggest drop in the PISA scores seems to have occurred in 2006, and the PISA grades have remained quite steady since then. What’s going on here? Did the introduction of the new curriculum in 2008 cause a drop in the PISA results in 2006? Is this a brand new type of quantum entanglement? As you all know, with ordinary everyday quantum entanglement, changes in physical properties can be transmitted across great distances instantaneously.3 Yes, instantaneously, but never backwards through time. As someone might say, that would be really spooky!

Sorry for the sarcasm.

If you accept the PISA results as evidence of a decline in our students' math abilities, then those same results show that the decline occurred well before the new curriculum could have had any influence. This also means that the PISA results provide no evidence that returning to the previous curriculum will repair things.

Do the PISA scores matter?

By the way, if you are concerned about our sinking rank in the math PISA league tables, note that in the 2015 round Canada moved up from 13th to 10th place.

David Staples (a columnist for the Edmonton Journal) has said that the people who want to return to the pre-2008 curriculum and teaching methods are not really concerned with our PISA rank (despite the comments quoted above). Their concern is that the proportion of lower-performing students is increasing while the proportion of higher-achieving students is decreasing.

Although that's true, and it might be serious, the decline started before 2015, in rounds in which none of the students who took the PISA test had been exposed to the new curriculum in elementary school. So, again, the PISA results are not evidence that the new curriculum is at fault. We'll have to look elsewhere for evidence that the new curriculum caused it.

And if we can't find any, please let us not blame the front-line workers and have this discussion degenerate into a round of teacher bashing.

REFERENCES

1 The conversion from the published PISA scores to percentages was done as follows:

Percent_grade = 60 + (Pisa_Score − 482) × (10 / 62.3).
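For readers who want to reproduce the conversion, here is the formula above as a small Python sketch, together with its inverse. The function names are illustrative. A score of 482 maps to 60%, and every 62.3 PISA points adds 10 percentage points, so the ±3% swing mentioned in the post corresponds to roughly ±19 PISA points.

```python
def pisa_to_percent(score: float) -> float:
    """Percent_grade = 60 + (Pisa_Score - 482) * (10 / 62.3)."""
    return 60 + (score - 482) * (10 / 62.3)


def percent_to_pisa(percent: float) -> float:
    """Inverse of pisa_to_percent: the PISA score that maps to a given percentage."""
    return 482 + (percent - 60) * (62.3 / 10)


print(pisa_to_percent(482))         # 60.0
print(round(pisa_to_percent(564)))  # 73
# A swing of 3 percentage points, expressed in PISA points.
print(round(percent_to_pisa(63) - percent_to_pisa(60), 1))  # 18.7
```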

More details can be found here.

2 David Martin's post about the 2012 PISA round is here, and John Scammell's is here.

3 Quantum entanglement: I'll let others explain it. Here are two YouTube videos that do a pretty good job.

Quantum Entanglement & Spooky Action at a Distance

Yro5 - Entanglement

** post was edited Dec 14 to correct two typos